In principle, entire evaluations can be done using qualitative research methods, without any statistical data, and many have been done this way. Think about the work of a restaurant critic, for example. She arrives at the restaurant – perhaps with a notepad – tastes the food, makes notes, then returns to her desk to write at length about how her experience compares to her standards for restaurants of this kind. There are no numbers, no experimental design, no randomization, but this is undeniably an evaluation.

If evaluation can be done this way, then why do some people have such a strong preference for statistics to begin with? My favorite answer at the moment is an information theoretic one: the methods commonly taught in introductory statistics courses are conventional ways of aggregating large amounts of information, reducing its complexity, and preparing it for decision-making.1 There are, of course, alternative ways of doing this. Below a certain level of complexity and upon familiar terrain, the human mind does it quite well unassisted. Picture two historians discussing a question like “What would have happened if Stalin had been prevented from taking control over the Bolshevik party?” – they will also aggregate large amounts of information, reduce its complexity, and boil things down to a few key (historical) decision points.2

Granting that we can use qualitative methods throughout the evaluation process if we wish, in this newsletter I’m going to point to some areas where I think we can use them the most effectively. I think that there are five critical moments when qualitative methods are most useful during the evaluation, and incidentally appear to vastly outperform statistical methods at these moments. However, I think there’s a good chance that these probably aren’t the moments that most evaluators are using qualitative methods – a situation I’ll address at the end of the essay.

#1. Defining the evaluand

It is not a given that we understand the nature of what we are evaluating. The safe assumption is that we do not. In program evaluation, you may be given documentation about what the program is supposed to do – usually information provided to the funder or the public as well – but you should not take this information at face value. Programs quickly drift into new configurations and behaviors for a variety of reasons, not all of which are adaptive. In product evaluation, you will be given the same pitch as a potential customer plus some additional behind-the-curtain insights. In reality, you’ll only be able to understand the product when you personally get your hands on it.

Qualitative research illuminates the characteristics of the evaluand at this early stage. For example, there is a lot of value in indexing and coding documentation, particularly when there is a lot of it. To this, you can add a round of semi-structured interviews with key stakeholders focused entirely on the nature of the evaluand. You will use the interviews to check your understanding of how the evaluand works in reality versus the documentation. Learning ethnographic methods will help you during site visits and while watching users in naturalistic settings.

Using qualitative methods to develop and check the definition of the evaluand is a high-leverage strategy. Having a more complete picture of the evaluand and how it works allows you to move more confidently through the subsequent phases of the project. You avoid wasting time planning around misconceptions about how the program operates. Nothing that is “obvious” to program line staff should come as a surprise to the evaluator after several months working on the project, and qualitative investigations of the definition of the evaluand front-load your learning process. If you are working on a team, conducting an initial qualitative study on the evaluand can be a good use of time for the most senior team member, who can then design a very tailored study for the rest of the team to execute under her advisement.

#2. Setting Benchmarks

Determining criteria and standards (which I collectively call “benchmarks”) is the hardest theoretical problem in evaluation. To do it well, we ideally need to combine normative, predictive, and descriptive reasoning. Good statistical skills will help, but they won’t take you all the way there. You’re also going to want knowledge of philosophy, logic, evaluation theory, and local needs. It’s these latter that qualitative methods help you systematically document.

Consider the setting of criteria, which are the general dimensions of merit and worth of the evaluand. Most of these dimensions of merit and worth follow easily from the definition of the evaluand. The definition of a alcohol abuse treatment program is that it is a program that helps people stop drinking dangerous amounts of alcohol, so the primary criterion for what makes such a program “good” is that it succeeds in reducing the rate at which participants drink. No qualitative research needed so far. However, there are usually some secondary objectives that the program designers have in mind, often intermediate steps in the logic model that are thought to be worthy criteria of success. These often include things like behavioral intent (in this case, intent to stop drinking), attitude changes, skill development, or lifestyle modifications. The criteria for judging these aspects of the evaluand can be set a priori or after gathering data. Qualitative methods are perfect for maintaining a wide aperture of inquiry as we determine the dimensions of value for these non-obvious criteria.

One recurrent experience I’ve had during my evaluations is that the initial benchmarks (usually set a priori to secure funding) did not take sufficient accounting of local conditions. For example, a school-based program might set a target that it will reach 1000 students in local schools in its first year without taking into account the fact that similar programs already exist in the district. When teachers and schools hear about the new program, they say “Oh, we already have one of those, they were here last month.” Even the most cursory qualitative study of local conditions could have alerted us that this would be a problem and we could have revised our benchmark accordingly.

The reason that investigating benchmarks is a high-leverage strategy for qualitative methods redounds to my remark above: this is the hardest problem in evaluation. A quant-only approach is very likely to miss something and this is the place where we can least afford to do that. One qual-to-quant workflow for setting benchmarks is implemented in my Symmetric software, which converts natural language statements from stakeholders into probability distributions with the option for amplifying expert opinions.

#3. Constructing Measures

I am so intrigued by the potential of using qualitative methods to help construct valid measures that roughly half of doctoral dissertation concerned this topic. The status quo in constructing measures is not good. A very typical workflow is that you ask a “survey expert” to construct a measure of x and they start writing out a long list of items in their favorite format – 80% of the time this will be a Likert scale regardless of whether this is appropriate – and then they will whittle down this list to a few key items. If you’re lucky, the survey expert will pilot the long list of items, do exploratory factor analysis or principal components analysis, then deliver you a shorter survey with an acceptable reliability measured by Cronbach’s alpha. Within psychometrics, this is not considered to be “best practice” by any stretch but it’s still the most common approach. The result is that a lot of professionally created surveys are confidently measuring nothing. If you are an organization that paid for a survey to be developed in this way, there is every chance you wasted your money.

An alternative to this workflow would be to start with qualitative methods, gathering the types of information that would be helpful before you begin authoring the actual instrument, then use qualitative methods to help validate the measure. Psychometric methods are still involved, but they are used in a mixed methods framework, since they can’t answer all our important questions on their own. For example, we can use qualitative methods to address questions like:

Is the construct meaningful and recognizable to members of the population?

What are the salient dimensions of this construct for participants? What parts of it are they consciously aware of?

Do participants experience the construct in roughly the same way? To what degree? What subgroups of participants are likely to emerge?

What are the indicators of different levels of the construct among the population?

What sources of construct-irrelevant variance will likely interfere with our measurement efforts?

What task formats will be most appropriate for this population and reduce reactivity to an acceptable level?

By addressing these questions, we can actually satisfy the philosophical requirements of measurement instead of hand-waiving about assumptions. Statistical methods can weigh in on some of these questions, but qualitative methods are more direct.

Once we have an instrument we would like to test, we can return to qualitative methods again for cognitive interviews. Cognitive interviews ask participants to engage with the task and think aloud as they do, providing rich validity evidence about the response process that people use when they respond to our questions. Although one cognitive interview approach is to treat items with a simple dichotomous good/bad scale, we can also take a more open-ended approach to analysis and try to continue learning about the construct via the interviews.

The reason that using qualitative methods in the constructing measures step is a high leverage strategy is that is greatly increases the validity of your tailored measures. Detailed qualitative work at this stage pays off in spades later. When I’ve had the time and budget to go deeply into qualitative work on constructing measures I’ve been able to build strong predictors of actual performance. At the end of the evaluation, you will be able to answer the question: “How do we know this survey is measuring anything?” using a corpus of qualitative data.

#4. Qualitative Performance Scoring

Direct qualitative performance measurement takes us back to my example of the restaurant critic above. She is using all her senses to make qualitative appraisals of the evaluand. In my imagination, she has a standard rubric that she uses for her job, with criteria like Ambiance, Originality, and Taste. During her session she is rating the performance of the restaurant on each of these criteria, comparing to her standards. Her ability to do this relies on her personal judgment, training, experience, and body. It is not necessarily an indicator of bias if she disagrees with other critics, and may in fact be evidence of her expertise, depending on the circumstances.

Qualitative appraisals of the performance of an evaluand can also be undertaken by non-experts. Interviewing participants or consumers as they experience the evaluand or just afterward is the foundation of user research. In some of my ongoing evaluations, I have asked participants permission to reach out to them by phone for direct conversations about their experiences in social programs in exchange for a good incentive. My team randomly selects a few participants and elicits their judgments about the programs in an open-ended format. I don’t bother most of the participants, but these 15-20 minute phone calls with just a handful have been enough to put us onto the scent of several positive and negative areas of performance that we would not have discovered otherwise.

Qualitative performance assessment can be difficult. This is why we have rubrics. Rubrics serve multiple functions. First, they set our major criteria in advance, which helps maintain focus. Second, they help us encode complex information about performances while the information is still fresh in our minds. Rubrics are meant to be used during or immediately after observing the performance. In Symmetric, I’ve made a tool that allows you to create, score, save data, and export score data from rubrics. This grew out of a tool that I developed for myself because I needed something that could quickly spin up a clickable rubric on my computer – now I use Symmetric to do this all the time.

Direct performance assessment is a high-leverage strategy for qualitative methods because it creates some of the most credible evaluation data possible. In product evaluation, this is “the voice of the customer.” In program evaluation, this is the kind of direct and unvarnished feedback that staff and leadership need, but which only outside evaluators can reliably get.

#5. Prior Elicitation

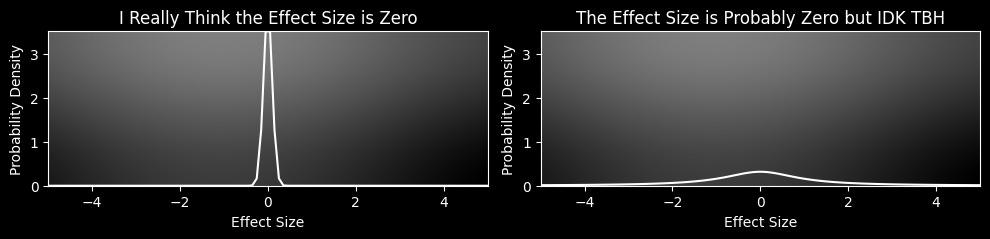

Prior probabilities are the degree of credibility we give to hypotheses in advance of seeing new data about them. For example, suppose I have a study that tests whether some humans can move small objects with their mind – what probability would you give that this study shows people can do this, in advance of seeing the data? That probability is a prior probability, or simply “prior.” Priors are important because they represent our current knowledge state before taking in new information. When we are very convinced of something, our priors are steep and spiky; when we willing to accept a wide range of possible answers, our priors are gentle slopes or even flat.

If we know in advance what kinds of statistical tests we are going to run in our evaluation – and we should – we also know in advance what parameters we are ultimately going to be making inferences about. For example, maybe it’s all going to come down to an effect size calculation at the end of the project. Maybe what we really want to know is how many people end up in back in jail.

If we have knowledge about these parameters, we can formalize this knowledge as a prior and include it in our model. The knowledge can come from community consensus, experts, or previous evaluations. Qualitative methods are what allow us to gather this prior knowledge, which is a process called “elicitation.”

Elicitation interviews, like cognitive interviews above, require special training for the interview. Also, like cognitive interviews, elicitation interviews require us to quickly train participants in how to respond. Elicitation interviews are highly structured and can even be assisted by special software packages to help participants visualize the information they are telling us. When an elicitation interview is complete, the participant has told us what they think the most likely values of the parameter are, as well as the distribution of those values. Once we have learned this information, we can mathematically pool the distributions of several participants or group them interesting ways, such as distinguishing between the views of skeptics and boosters.

In Bayesian statistics, it is possible to place a prior on virtually any parameter - means, error terms, betas coefficients, effect sizes. What this means in practical terms is that we can use whatever knowledge we are able to qualitatively access in order to improve our models.

The reason that prior elicitation is a high leverage use of qualitative methods in evaluation is that it makes our models maximally informed by the available evidence. It also offers a way for stakeholders to register their predictions about what will happen in the evaluation, helping you to calibrate the way you communicate results.

The Usual Qualitative Approach

When I’m brought on to do a new evaluation, one of my first tasks is to read the previous reports written by other evaluation teams. I do this for several reasons: to avoid telling the stakeholders information that they’ve already heard before, to build on aspects of the previous evaluations that worked, to get a history of the project. Reading the qualitative portion of the evaluations done by others I am, I am sorry to say, usually disappointed. In my view, there are three major ways that qualitative methods go astray.

The most common issue with qualitative methods used in evaluations is that they are employed within a general research rather than an evaluation paradigm. While they may use good techniques like qualitative content analysis (QCA) or thematic analysis, their qualitative inquiry produces “topics” or “themes” that do not feed directly into any evaluative conclusions. This is because the logic of evaluation is being ignored. No criteria or standards have been laid down by which to judge whether the presence of a particular theme means that the evaluand is succeeding or failing.

For example, in the social programs that I evaluate, one common comment in open-ended fields on surveys is that the program should offer food. People want snacks. If there are snacks, people want “better snacks.” Is this a problem for programs that are not meant to offer food and which provide thousands of dollars in free services to a given participant in a given year? We need a way to decide. The answer depends on the criteria and standards we have for the program. For some programs this is a problem and for others it isn’t. On its own, sorting all the comments into piles and reporting on the contents of each pile doesn’t advance the evaluation. This is the case in formative evaluation as well, since we shouldn’t be open to changing everything about the evaluand, only to improving it. We would do well to think about the difference.

A second common issue with the use of qualitative methods evaluations is engaging in meaningless quantification. I’m talking about counting up occurrences of “codes” in a corpus or interviews or focus groups and writing about these counts as though they are inherently meaningful. They are not. To make qualitative coding meaningful, we need more structure up front. This is one reason why abductive coding is a good default. The fact that 12 participants in a series of 15 interviews said something that could reasonably be classified under the heading of “Access to Healthcare” a total of 28 times means… what exactly? Perhaps all the participants were saying something like “This community has wonderful access to healthcare” or they were saying “We have no access to healthcare” or a mixture of these or something entirely off topic. In a lot of qualitative evaluation research that I read, one gets no idea why codes are being counted.

Perhaps the most jarring use of meaningless quantification I see in evaluations is the implication that, because more than a handful of participants said something, it is probably true. Bats are not blind, it’s not illegal to shout “fire” in a crowded theater in the US, the Amazon does not produce 20% of the world’s oxygen, the phrase “rule of thumb” had nothing to do with corporal punishment, and the word “the” has never been pronounced or spelled “ye” in English. Most people believe many demonstrably incorrect things and it is the job of evaluators to figure out which of the things people say are true if we can. When we can’t, we owe it to the reader not to pass off participant beliefs as fact. Journalists don’t do this, evaluators shouldn’t either.

There are other problems we could discuss in standard qualitative methods in evaluation, but I’ll end on what I think is the strangest one: often qualitative methods being used are not actually answering any of the evaluation questions. Now, I don’t personally think that evaluation questions are a hard requirement3 for an evaluation study, but if we are using them to organize the study, then we shouldn’t be dedicating huge amounts of effort to collecting and analyzing data that doesn’t answer these questions. Answering well-formed questions posed to us by stakeholders is an aspect of what I’ve taken to calling methodological symmetry. Think of the general coding and theming approach I mentioned above – what evaluation question could that be answering? “What are the general categories of feedback we getting about the evaluand?” I’ve never seen a real stakeholder ask this question or anything close to it because it isn’t very useful information. A real stakeholder might ask something like “What are the areas in which the program could improve?” – but notice that the answer to this question is not raw count data of codes or themes. Once again, we need much more up-front structure to answer an essentially evaluative question like this.

Recall the title of Fisher’s 1925 book in which he popularized the idea of the p < .05 cutoff: Statistical Methods for Research Workers. These are techniques for the “worker” to prepare data for consideration by more advanced levels of reasoning. Calculate the p-value, put the fries in the bag.

Also, notice how little statistical reasoning would help us with this question. Qualitative reasoning is our primary method of reasoning about data. Someone who refuses to master qualitative methods because they “lack the rigor” of statistics is like someone who wants to use a power drill but can’t be bothered to learn to operate a screwdriver. (I think this analogy works because there are a lot of things you can do with a screwdriver that would break a power drill.)

We don’t need explicit evaluation questions for the simple reason that all evaluations have implicit questions: what is the merit, worth, or significance of the evaluand.